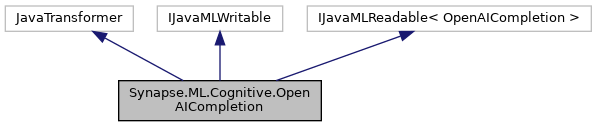

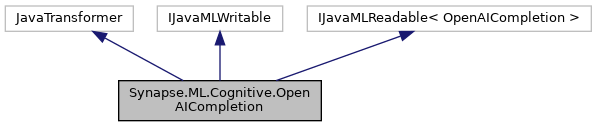

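OpenAICompletion sends text prompts to an OpenAI completion endpoint, such as an Azure OpenAI deployment, and writes the completions to an output column.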

Public Member Functions

| Return type | Member | Description |
|---|---|---|
| | `OpenAICompletion()` | Creates an OpenAICompletion without any parameters. |
| | `OpenAICompletion(string uid)` | Creates an OpenAICompletion with a UID that gives the OpenAICompletion a unique ID. |
| `OpenAICompletion` | `SetApiVersion(string value)` | Sets value for apiVersion |
| `OpenAICompletion` | `SetApiVersionCol(string value)` | Sets value for apiVersion column |
| `OpenAICompletion` | `SetBatchIndexPrompt(int[][] value)` | Sets value for batchIndexPrompt |
| `OpenAICompletion` | `SetBatchIndexPromptCol(string value)` | Sets value for batchIndexPrompt column |
| `OpenAICompletion` | `SetBatchPrompt(string[] value)` | Sets value for batchPrompt |
| `OpenAICompletion` | `SetBatchPromptCol(string value)` | Sets value for batchPrompt column |
| `OpenAICompletion` | `SetBestOf(int value)` | Sets value for bestOf |
| `OpenAICompletion` | `SetBestOfCol(string value)` | Sets value for bestOf column |
| `OpenAICompletion` | `SetCacheLevel(int value)` | Sets value for cacheLevel |
| `OpenAICompletion` | `SetCacheLevelCol(string value)` | Sets value for cacheLevel column |
| `OpenAICompletion` | `SetConcurrency(int value)` | Sets value for concurrency |
| `OpenAICompletion` | `SetConcurrentTimeout(double value)` | Sets value for concurrentTimeout |
| `OpenAICompletion` | `SetDeploymentName(string value)` | Sets value for deploymentName |
| `OpenAICompletion` | `SetDeploymentNameCol(string value)` | Sets value for deploymentName column |
| `OpenAICompletion` | `SetEcho(bool value)` | Sets value for echo |
| `OpenAICompletion` | `SetEchoCol(string value)` | Sets value for echo column |
| `OpenAICompletion` | `SetErrorCol(string value)` | Sets value for errorCol |
| `OpenAICompletion` | `SetFrequencyPenalty(double value)` | Sets value for frequencyPenalty |
| `OpenAICompletion` | `SetFrequencyPenaltyCol(string value)` | Sets value for frequencyPenalty column |
| `OpenAICompletion` | `SetHandler(object value)` | Sets value for handler |
| `OpenAICompletion` | `SetIndexPrompt(int[] value)` | Sets value for indexPrompt |
| `OpenAICompletion` | `SetIndexPromptCol(string value)` | Sets value for indexPrompt column |
| `OpenAICompletion` | `SetLogProbs(int value)` | Sets value for logProbs |
| `OpenAICompletion` | `SetLogProbsCol(string value)` | Sets value for logProbs column |
| `OpenAICompletion` | `SetMaxTokens(int value)` | Sets value for maxTokens |
| `OpenAICompletion` | `SetMaxTokensCol(string value)` | Sets value for maxTokens column |
| `OpenAICompletion` | `SetModel(string value)` | Sets value for model |
| `OpenAICompletion` | `SetModelCol(string value)` | Sets value for model column |
| `OpenAICompletion` | `SetN(int value)` | Sets value for n |
| `OpenAICompletion` | `SetNCol(string value)` | Sets value for n column |
| `OpenAICompletion` | `SetOutputCol(string value)` | Sets value for outputCol |
| `OpenAICompletion` | `SetPresencePenalty(double value)` | Sets value for presencePenalty |
| `OpenAICompletion` | `SetPresencePenaltyCol(string value)` | Sets value for presencePenalty column |
| `OpenAICompletion` | `SetPrompt(string value)` | Sets value for prompt |
| `OpenAICompletion` | `SetPromptCol(string value)` | Sets value for prompt column |
| `OpenAICompletion` | `SetStop(string value)` | Sets value for stop |
| `OpenAICompletion` | `SetStopCol(string value)` | Sets value for stop column |
| `OpenAICompletion` | `SetSubscriptionKey(string value)` | Sets value for subscriptionKey |
| `OpenAICompletion` | `SetSubscriptionKeyCol(string value)` | Sets value for subscriptionKey column |
| `OpenAICompletion` | `SetTemperature(double value)` | Sets value for temperature |
| `OpenAICompletion` | `SetTemperatureCol(string value)` | Sets value for temperature column |
| `OpenAICompletion` | `SetTimeout(double value)` | Sets value for timeout |
| `OpenAICompletion` | `SetTopP(double value)` | Sets value for topP |
| `OpenAICompletion` | `SetTopPCol(string value)` | Sets value for topP column |
| `OpenAICompletion` | `SetUrl(string value)` | Sets value for url |
| `OpenAICompletion` | `SetUser(string value)` | Sets value for user |
| `OpenAICompletion` | `SetUserCol(string value)` | Sets value for user column |
| `string` | `GetApiVersion()` | Gets apiVersion value |
| `int[][]` | `GetBatchIndexPrompt()` | Gets batchIndexPrompt value |
| `string[]` | `GetBatchPrompt()` | Gets batchPrompt value |
| `int` | `GetBestOf()` | Gets bestOf value |
| `int` | `GetCacheLevel()` | Gets cacheLevel value |
| `int` | `GetConcurrency()` | Gets concurrency value |
| `double` | `GetConcurrentTimeout()` | Gets concurrentTimeout value |
| `string` | `GetDeploymentName()` | Gets deploymentName value |
| `bool` | `GetEcho()` | Gets echo value |
| `string` | `GetErrorCol()` | Gets errorCol value |
| `double` | `GetFrequencyPenalty()` | Gets frequencyPenalty value |
| `object` | `GetHandler()` | Gets handler value |
| `int[]` | `GetIndexPrompt()` | Gets indexPrompt value |
| `int` | `GetLogProbs()` | Gets logProbs value |
| `int` | `GetMaxTokens()` | Gets maxTokens value |
| `string` | `GetModel()` | Gets model value |
| `int` | `GetN()` | Gets n value |
| `string` | `GetOutputCol()` | Gets outputCol value |
| `double` | `GetPresencePenalty()` | Gets presencePenalty value |
| `string` | `GetPrompt()` | Gets prompt value |
| `string` | `GetStop()` | Gets stop value |
| `string` | `GetSubscriptionKey()` | Gets subscriptionKey value |
| `double` | `GetTemperature()` | Gets temperature value |
| `double` | `GetTimeout()` | Gets timeout value |
| `double` | `GetTopP()` | Gets topP value |
| `string` | `GetUrl()` | Gets url value |
| `string` | `GetUser()` | Gets user value |
| `void` | `Save(string path)` | Saves the object so that it can be loaded later using Load. These objects can also be shared with Scala by loading or saving them there. |
| `JavaMLWriter` | `Write()` | |
| `JavaMLReader<OpenAICompletion>` | `Read()` | Gets the corresponding JavaMLReader instance. |

Static Public Member Functions

| Return type | Member | Description |
|---|---|---|
| `static OpenAICompletion` | `Load(string path)` | Loads the OpenAICompletion that was previously saved using Save(string). |

Detailed Description

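OpenAICompletion sends text prompts to an OpenAI completion endpoint, such as an Azure OpenAI deployment, and writes the completions to an output column. Most parameters have a pair of setters. The plain setter (for example, SetPrompt) applies one value to every row. The Col setter (for example, SetPromptCol) takes the name of a DataFrame column that supplies a per-row value.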
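The sketch below shows how the documented setters chain into one configured object, mixing a per-row column (SetPromptCol) with single values (SetMaxTokens, SetTemperature). It also persists the result with Save and restores it with Load. It assumes a Spark session with the SynapseML .NET bindings is already running. The endpoint URL, deployment name, column names, save path, and the `OPENAI_API_KEY` environment variable are placeholders, not values defined on this page. Applying the object to a DataFrame is omitted because that method is inherited and not documented here.

```csharp
using System;
using Synapse.ML.Cognitive;

// Requires a running Spark session with the SynapseML .NET bindings.
// Endpoint, deployment, column names, path, and OPENAI_API_KEY are placeholders.
var completion = new OpenAICompletion()
    .SetSubscriptionKey(Environment.GetEnvironmentVariable("OPENAI_API_KEY"))
    .SetUrl("https://YOUR-RESOURCE.openai.azure.com/")
    .SetDeploymentName("YOUR-DEPLOYMENT")
    .SetPromptCol("prompt")      // per-row prompt taken from the "prompt" column
    .SetMaxTokens(200)           // one value applied to every row
    .SetTemperature(0.0)
    .SetOutputCol("completions")
    .SetErrorCol("errors");

// Persist the configuration and restore it later (also loadable from Scala).
completion.Save("/tmp/openai-completion");
OpenAICompletion restored = OpenAICompletion.Load("/tmp/openai-completion");
Console.WriteLine(restored.GetDeploymentName());
```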

Constructor & Destructor Documentation

◆ OpenAICompletion() [1/2]

`Synapse.ML.Cognitive.OpenAICompletion.OpenAICompletion()` (inline)

Creates an OpenAICompletion without any parameters.

◆ OpenAICompletion() [2/2]

`Synapse.ML.Cognitive.OpenAICompletion.OpenAICompletion(string uid)` (inline)

Creates an OpenAICompletion with a UID that gives the OpenAICompletion a unique ID.

- Parameters

- uid: An immutable unique ID for the object and its derivatives.

Member Function Documentation

◆ GetApiVersion()

`string Synapse.ML.Cognitive.OpenAICompletion.GetApiVersion()`

Gets apiVersion value

- Returns

- apiVersion: Version of the API

◆ GetBatchIndexPrompt()

`int[][] Synapse.ML.Cognitive.OpenAICompletion.GetBatchIndexPrompt()`

Gets batchIndexPrompt value

- Returns

- batchIndexPrompt: Sequence of index sequences to complete

◆ GetBatchPrompt()

`string[] Synapse.ML.Cognitive.OpenAICompletion.GetBatchPrompt()`

Gets batchPrompt value

- Returns

- batchPrompt: Sequence of prompts to complete

◆ GetBestOf()

`int Synapse.ML.Cognitive.OpenAICompletion.GetBestOf()`

Gets bestOf value

- Returns

- bestOf: How many generations to create server side; only the best one is returned. Intermediate progress is not streamed if best_of > 1. Has a maximum value of 128.
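The OpenAI API documentation defines the "best" completion as the one with the highest log probability per token. Assuming that definition, the sketch below keeps the n best candidates out of the generated set. `Candidate`, `BestOfSelection`, and `PickBest` are hypothetical names. The real selection happens server side, and this is not SynapseML code.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical types for illustration; not part of SynapseML or the OpenAI API.
public sealed record Candidate(string Text, double[] TokenLogProbs);

public static class BestOfSelection
{
    // Mean log probability per token; higher means the model was more confident.
    public static double MeanLogProb(Candidate candidate) =>
        candidate.TokenLogProbs.Length == 0 ? double.NegativeInfinity : candidate.TokenLogProbs.Average();

    // From the bestOf generated candidates, keep the n with the highest mean log probability.
    public static List<Candidate> PickBest(IReadOnlyList<Candidate> generated, int n)
    {
        if (n < 1 || n > generated.Count)
            throw new ArgumentOutOfRangeException(nameof(n), "n must be between 1 and the number of candidates.");
        return generated.OrderByDescending(c => MeanLogProb(c)).Take(n).ToList();
    }
}
```

Ranking by the mean rather than the sum keeps longer candidates from being penalized simply for having more tokens.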

◆ GetCacheLevel()

`int Synapse.ML.Cognitive.OpenAICompletion.GetCacheLevel()`

Gets cacheLevel value

- Returns

- cacheLevel: Can be used to disable server-side caching: 0 = no cache, 1 = prompt prefix enabled, 2 = full cache

◆ GetConcurrency()

`int Synapse.ML.Cognitive.OpenAICompletion.GetConcurrency()`

Gets concurrency value

- Returns

- concurrency: Maximum number of concurrent calls

◆ GetConcurrentTimeout()

`double Synapse.ML.Cognitive.OpenAICompletion.GetConcurrentTimeout()`

Gets concurrentTimeout value

- Returns

- concurrentTimeout: Maximum number of seconds to wait on futures if concurrency >= 1
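The concurrency and concurrentTimeout parameters bound how many requests are in flight at once and how long to wait for them. The sketch below reproduces that behavior with a `SemaphoreSlim` and a timed wait. `BoundedCalls` and `RunAsync` are hypothetical names, and this is an analogy rather than SynapseML's internal implementation.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Threading;
using System.Threading.Tasks;

// Hypothetical helper illustrating concurrency / concurrentTimeout semantics; not SynapseML code.
public static class BoundedCalls
{
    // Runs all calls with at most `concurrency` in flight, waits at most
    // `concurrentTimeoutSeconds` for them, and reports the peak observed concurrency.
    public static async Task<(List<T> Results, int MaxInFlight)> RunAsync<T>(
        IReadOnlyList<Func<Task<T>>> calls, int concurrency, double concurrentTimeoutSeconds)
    {
        var gate = new SemaphoreSlim(concurrency);
        var sync = new object();
        int inFlight = 0, maxInFlight = 0;

        async Task<T> RunOne(Func<Task<T>> call)
        {
            await gate.WaitAsync();              // wait for a free slot
            lock (sync) { inFlight++; maxInFlight = Math.Max(maxInFlight, inFlight); }
            try
            {
                return await call();
            }
            finally
            {
                lock (sync) { inFlight--; }
                gate.Release();                  // free the slot for the next call
            }
        }

        Task<T[]> all = Task.WhenAll(calls.Select(c => RunOne(c)));
        Task deadline = Task.Delay(TimeSpan.FromSeconds(concurrentTimeoutSeconds));
        if (await Task.WhenAny(all, deadline) != all)
            throw new TimeoutException($"Calls did not finish within {concurrentTimeoutSeconds} s.");
        return ((await all).ToList(), maxInFlight);
    }
}
```

Results come back in input order because `Task.WhenAll` preserves it. The timeout applies to the whole batch rather than to each call.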

◆ GetDeploymentName()

`string Synapse.ML.Cognitive.OpenAICompletion.GetDeploymentName()`

Gets deploymentName value

- Returns

- deploymentName: The name of the deployment

◆ GetEcho()

`bool Synapse.ML.Cognitive.OpenAICompletion.GetEcho()`

Gets echo value

- Returns

- echo: Echo back the prompt in addition to the completion

◆ GetErrorCol()

`string Synapse.ML.Cognitive.OpenAICompletion.GetErrorCol()`

Gets errorCol value

- Returns

- errorCol: Name of the column to hold HTTP errors

◆ GetFrequencyPenalty()

`double Synapse.ML.Cognitive.OpenAICompletion.GetFrequencyPenalty()`

Gets frequencyPenalty value

- Returns

- frequencyPenalty: How much to penalize new tokens based on their existing frequency in the text so far. Decreases the model's likelihood to repeat the same line verbatim.

◆ GetHandler()

`object Synapse.ML.Cognitive.OpenAICompletion.GetHandler()`

Gets handler value

- Returns

- handler: Which strategy to use when handling requests

◆ GetIndexPrompt()

`int[] Synapse.ML.Cognitive.OpenAICompletion.GetIndexPrompt()`

Gets indexPrompt value

- Returns

- indexPrompt: Sequence of indexes to complete

◆ GetLogProbs()

`int Synapse.ML.Cognitive.OpenAICompletion.GetLogProbs()`

Gets logProbs value

- Returns

- logProbs: Include the log probabilities on the `logprobs` most likely tokens, as well as the chosen tokens. For example, if `logprobs` is 10, the API returns a list of the 10 most likely tokens. If `logprobs` is 0, only the chosen tokens have log probabilities returned. Minimum of 0 and maximum of 100 allowed.
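To make the logProbs setting concrete, the sketch below takes the probability distribution at one output position. It returns the chosen token's log probability together with the `logProbs` most likely alternatives. `LogProbsSketch` and `AtPosition` are hypothetical names; the service computes these values itself.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical illustration of what logProbs controls; not SynapseML or OpenAI code.
public static class LogProbsSketch
{
    // Returns the log probability of the chosen token and the `logProbs` most
    // likely alternatives at this position (an empty list when logProbs is 0).
    public static (double Chosen, List<KeyValuePair<string, double>> Top) AtPosition(
        IReadOnlyDictionary<string, double> probabilities, string chosenToken, int logProbs)
    {
        if (logProbs < 0 || logProbs > 100)
            throw new ArgumentOutOfRangeException(nameof(logProbs), "Allowed range is 0 to 100.");

        var top = probabilities
            .OrderByDescending(p => p.Value)
            .Take(logProbs)
            .Select(p => new KeyValuePair<string, double>(p.Key, Math.Log(p.Value)))
            .ToList();
        return (Math.Log(probabilities[chosenToken]), top);
    }
}
```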

◆ GetMaxTokens()

`int Synapse.ML.Cognitive.OpenAICompletion.GetMaxTokens()`

Gets maxTokens value

- Returns

- maxTokens: The maximum number of tokens to generate. Has minimum of 0.

◆ GetModel()

`string Synapse.ML.Cognitive.OpenAICompletion.GetModel()`

Gets model value

- Returns

- model: The name of the model to use

◆ GetN()

`int Synapse.ML.Cognitive.OpenAICompletion.GetN()`

Gets n value

- Returns

- n: How many snippets to generate for each prompt. Minimum of 1 and maximum of 128 allowed.

◆ GetOutputCol()

`string Synapse.ML.Cognitive.OpenAICompletion.GetOutputCol()`

Gets outputCol value

- Returns

- outputCol: The name of the output column

◆ GetPresencePenalty()

`double Synapse.ML.Cognitive.OpenAICompletion.GetPresencePenalty()`

Gets presencePenalty value

- Returns

- presencePenalty: How much to penalize new tokens based on whether they appear in the text so far. Increases the model's likelihood to talk about new topics. Has a minimum of -2 and a maximum of 2.
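OpenAI's API documentation describes both penalties as adjustments to a token's logit before sampling. The frequency penalty is subtracted once for every previous occurrence of the token. The presence penalty is subtracted once if the token has occurred at all. The sketch below applies that rule to made-up logits. `Penalties` and `Apply` are hypothetical names, and the rule comes from OpenAI's documentation rather than from this page.

```csharp
using System.Collections.Generic;

// Sketch of the penalty rule from OpenAI's API documentation:
//   logit[j] -= count[j] * frequencyPenalty + (count[j] > 0 ? 1 : 0) * presencePenalty
// Token IDs and logits are made-up inputs; this is not SynapseML code.
public static class Penalties
{
    public static Dictionary<int, double> Apply(
        IReadOnlyDictionary<int, double> logits, IEnumerable<int> generatedSoFar,
        double frequencyPenalty, double presencePenalty)
    {
        // Count how often each token has already been generated.
        var counts = new Dictionary<int, int>();
        foreach (int token in generatedSoFar)
            counts[token] = counts.TryGetValue(token, out int seen) ? seen + 1 : 1;

        var adjusted = new Dictionary<int, double>();
        foreach (var entry in logits)
        {
            int count = counts.TryGetValue(entry.Key, out int n) ? n : 0;
            // Frequency penalty scales with the count; presence penalty applies once.
            adjusted[entry.Key] = entry.Value - count * frequencyPenalty - (count > 0 ? presencePenalty : 0.0);
        }
        return adjusted;
    }
}
```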

◆ GetPrompt()

`string Synapse.ML.Cognitive.OpenAICompletion.GetPrompt()`

Gets prompt value

- Returns

- prompt: The text to complete

◆ GetStop()

`string Synapse.ML.Cognitive.OpenAICompletion.GetStop()`

Gets stop value

- Returns

- stop: A sequence which indicates the end of the current document.
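When the model generates the stop sequence, the completion ends there. In the OpenAI API, the stop sequence itself is not included in the returned text. The sketch below applies that rule to an already generated string. `StopSequence` and `Truncate` are hypothetical names, not part of SynapseML.

```csharp
using System;

// Hypothetical illustration of how a stop sequence ends a completion.
public static class StopSequence
{
    // Cuts the text at the first occurrence of `stop`; the stop sequence is not kept.
    public static string Truncate(string generated, string stop)
    {
        if (string.IsNullOrEmpty(stop)) return generated;
        int index = generated.IndexOf(stop, StringComparison.Ordinal);
        return index < 0 ? generated : generated.Substring(0, index);
    }
}
```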

◆ GetSubscriptionKey()

`string Synapse.ML.Cognitive.OpenAICompletion.GetSubscriptionKey()`

Gets subscriptionKey value

- Returns

- subscriptionKey: the API key to use

◆ GetTemperature()

`double Synapse.ML.Cognitive.OpenAICompletion.GetTemperature()`

Gets temperature value

- Returns

- temperature: What sampling temperature to use. Higher values mean the model takes more risks. Try 0.9 for more creative applications and 0 (argmax sampling) for ones with a well-defined answer. We generally recommend setting this or `top_p`, but not both. Minimum of 0 and maximum of 2 allowed.
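In the standard formulation, temperature divides the logits before the softmax. Higher values flatten the distribution, lower values sharpen it, and 0 reduces to argmax. The sketch below shows that formulation. `TemperatureSketch` is a hypothetical name, and the service's exact sampling code is not documented here.

```csharp
using System;
using System.Linq;

// Hypothetical illustration of temperature scaling; not SynapseML or OpenAI code.
public static class TemperatureSketch
{
    // Converts logits to probabilities at the given temperature.
    // Temperature 0 is treated as argmax: all probability mass on the highest logit.
    public static double[] Probabilities(double[] logits, double temperature)
    {
        if (temperature < 0 || temperature > 2)
            throw new ArgumentOutOfRangeException(nameof(temperature), "Allowed range is 0 to 2.");
        if (temperature == 0)
        {
            int best = Array.IndexOf(logits, logits.Max());
            return logits.Select((_, i) => i == best ? 1.0 : 0.0).ToArray();
        }
        double max = logits.Max();   // subtract the max for numerical stability
        double[] weights = logits.Select(l => Math.Exp((l - max) / temperature)).ToArray();
        double sum = weights.Sum();
        return weights.Select(w => w / sum).ToArray();
    }
}
```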

◆ GetTimeout()

`double Synapse.ML.Cognitive.OpenAICompletion.GetTimeout()`

Gets timeout value

- Returns

- timeout: number of seconds to wait before closing the connection

◆ GetTopP()

`double Synapse.ML.Cognitive.OpenAICompletion.GetTopP()`

Gets topP value

- Returns

- topP: An alternative to sampling with temperature, called nucleus sampling, where the model considers only the tokens within the top_p probability mass. For example, 0.1 means only the tokens comprising the top 10% probability mass are considered. We generally recommend setting this or `temperature`, but not both. Minimum of 0 and maximum of 1 allowed.
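Nucleus sampling keeps the smallest set of most likely tokens whose cumulative probability reaches top_p and samples only from that set. The sketch below shows the filtering step on a made-up distribution. `NucleusSketch` and `Filter` are hypothetical names, not part of SynapseML.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical illustration of nucleus (top_p) filtering; not SynapseML code.
public static class NucleusSketch
{
    // Keeps the most likely tokens until their cumulative probability reaches topP,
    // then renormalizes the kept probabilities so they sum to 1.
    public static Dictionary<string, double> Filter(IReadOnlyDictionary<string, double> probabilities, double topP)
    {
        if (topP < 0 || topP > 1)
            throw new ArgumentOutOfRangeException(nameof(topP), "Allowed range is 0 to 1.");

        var kept = new List<KeyValuePair<string, double>>();
        double cumulative = 0;
        foreach (var entry in probabilities.OrderByDescending(p => p.Value))
        {
            kept.Add(entry);
            cumulative += entry.Value;
            if (cumulative >= topP) break;   // the most likely token is always kept
        }
        return kept.ToDictionary(e => e.Key, e => e.Value / cumulative);
    }
}
```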

◆ GetUrl()

`string Synapse.ML.Cognitive.OpenAICompletion.GetUrl()`

Gets url value

- Returns

- url: URL of the service

◆ GetUser()

`string Synapse.ML.Cognitive.OpenAICompletion.GetUser()`

Gets user value

- Returns

- user: The ID of the end-user, for use in tracking and rate-limiting.

◆ Load()

`static OpenAICompletion Synapse.ML.Cognitive.OpenAICompletion.Load(string path)`

Loads the OpenAICompletion that was previously saved using Save(string).

- Parameters

- path: The path the previous OpenAICompletion was saved to

- Returns

- New OpenAICompletion object, loaded from path.

◆ Read()

`JavaMLReader<OpenAICompletion> Synapse.ML.Cognitive.OpenAICompletion.Read()`

Gets the corresponding JavaMLReader instance.

- Returns

- A JavaMLReader<OpenAICompletion> instance for this ML instance.

◆ Save()

`void Synapse.ML.Cognitive.OpenAICompletion.Save(string path)`

Saves the object so that it can be loaded later using Load. These objects can also be shared with Scala by loading or saving them there.

- Parameters

- path: The path to save the object to

◆ SetApiVersion()

`OpenAICompletion Synapse.ML.Cognitive.OpenAICompletion.SetApiVersion(string value)`

Sets value for apiVersion

- Parameters

- value: Version of the API

- Returns

- New OpenAICompletion object

◆ SetApiVersionCol()

`OpenAICompletion Synapse.ML.Cognitive.OpenAICompletion.SetApiVersionCol(string value)`

Sets value for apiVersion column

- Parameters

- value: Name of the column containing the version of the API

- Returns

- New OpenAICompletion object

◆ SetBatchIndexPrompt()

`OpenAICompletion Synapse.ML.Cognitive.OpenAICompletion.SetBatchIndexPrompt(int[][] value)`

Sets value for batchIndexPrompt

- Parameters

- value: Sequence of index sequences to complete

- Returns

- New OpenAICompletion object

◆ SetBatchIndexPromptCol()

`OpenAICompletion Synapse.ML.Cognitive.OpenAICompletion.SetBatchIndexPromptCol(string value)`

Sets value for batchIndexPrompt column

- Parameters

- value: Name of the column containing the sequences of index sequences to complete

- Returns

- New OpenAICompletion object

◆ SetBatchPrompt()

`OpenAICompletion Synapse.ML.Cognitive.OpenAICompletion.SetBatchPrompt(string[] value)`

Sets value for batchPrompt

- Parameters

- value: Sequence of prompts to complete

- Returns

- New OpenAICompletion object

◆ SetBatchPromptCol()

`OpenAICompletion Synapse.ML.Cognitive.OpenAICompletion.SetBatchPromptCol(string value)`

Sets value for batchPrompt column

- Parameters

- value: Name of the column containing the sequences of prompts to complete

- Returns

- New OpenAICompletion object

◆ SetBestOf()

`OpenAICompletion Synapse.ML.Cognitive.OpenAICompletion.SetBestOf(int value)`

Sets value for bestOf

- Parameters

- value: How many generations to create server side; only the best one is returned. Intermediate progress is not streamed if best_of > 1. Has a maximum value of 128.

- Returns

- New OpenAICompletion object

◆ SetBestOfCol()

`OpenAICompletion Synapse.ML.Cognitive.OpenAICompletion.SetBestOfCol(string value)`

Sets value for bestOf column

- Parameters

- value: Name of the column containing, per row, how many generations to create server side (only the best one is returned). Has a maximum value of 128.

- Returns

- New OpenAICompletion object

◆ SetCacheLevel()

`OpenAICompletion Synapse.ML.Cognitive.OpenAICompletion.SetCacheLevel(int value)`

Sets value for cacheLevel

- Parameters

- value: Can be used to disable server-side caching: 0 = no cache, 1 = prompt prefix enabled, 2 = full cache

- Returns

- New OpenAICompletion object

◆ SetCacheLevelCol()

`OpenAICompletion Synapse.ML.Cognitive.OpenAICompletion.SetCacheLevelCol(string value)`

Sets value for cacheLevel column

- Parameters

- value: Name of the column containing the cache level (0 = no cache, 1 = prompt prefix enabled, 2 = full cache)

- Returns

- New OpenAICompletion object

◆ SetConcurrency()

`OpenAICompletion Synapse.ML.Cognitive.OpenAICompletion.SetConcurrency(int value)`

Sets value for concurrency

- Parameters

- value: Maximum number of concurrent calls

- Returns

- New OpenAICompletion object

◆ SetConcurrentTimeout()

`OpenAICompletion Synapse.ML.Cognitive.OpenAICompletion.SetConcurrentTimeout(double value)`

Sets value for concurrentTimeout

- Parameters

- value: Maximum number of seconds to wait on futures if concurrency >= 1

- Returns

- New OpenAICompletion object

◆ SetDeploymentName()

`OpenAICompletion Synapse.ML.Cognitive.OpenAICompletion.SetDeploymentName(string value)`

Sets value for deploymentName

- Parameters

- value: The name of the deployment

- Returns

- New OpenAICompletion object

◆ SetDeploymentNameCol()

`OpenAICompletion Synapse.ML.Cognitive.OpenAICompletion.SetDeploymentNameCol(string value)`

Sets value for deploymentName column

- Parameters

- value: Name of the column containing the deployment name

- Returns

- New OpenAICompletion object

◆ SetEcho()

`OpenAICompletion Synapse.ML.Cognitive.OpenAICompletion.SetEcho(bool value)`

Sets value for echo

- Parameters

- value: Echo back the prompt in addition to the completion

- Returns

- New OpenAICompletion object

◆ SetEchoCol()

`OpenAICompletion Synapse.ML.Cognitive.OpenAICompletion.SetEchoCol(string value)`

Sets value for echo column

- Parameters

- value: Name of the column containing, per row, whether to echo back the prompt in addition to the completion

- Returns

- New OpenAICompletion object

◆ SetErrorCol()

`OpenAICompletion Synapse.ML.Cognitive.OpenAICompletion.SetErrorCol(string value)`

Sets value for errorCol

- Parameters

- value: Name of the column to hold HTTP errors

- Returns

- New OpenAICompletion object

◆ SetFrequencyPenalty()

`OpenAICompletion Synapse.ML.Cognitive.OpenAICompletion.SetFrequencyPenalty(double value)`

Sets value for frequencyPenalty

- Parameters

- value: How much to penalize new tokens based on their existing frequency in the text so far. Decreases the model's likelihood to repeat the same line verbatim.

- Returns

- New OpenAICompletion object

◆ SetFrequencyPenaltyCol()

`OpenAICompletion Synapse.ML.Cognitive.OpenAICompletion.SetFrequencyPenaltyCol(string value)`

Sets value for frequencyPenalty column

- Parameters

- value: Name of the column containing the frequency penalty, which penalizes new tokens based on their existing frequency in the text so far

- Returns

- New OpenAICompletion object

◆ SetHandler()

`OpenAICompletion Synapse.ML.Cognitive.OpenAICompletion.SetHandler(object value)`

Sets value for handler

- Parameters

- value: Which strategy to use when handling requests

- Returns

- New OpenAICompletion object

◆ SetIndexPrompt()

`OpenAICompletion Synapse.ML.Cognitive.OpenAICompletion.SetIndexPrompt(int[] value)`

Sets value for indexPrompt

- Parameters

- value: Sequence of indexes to complete

- Returns

- New OpenAICompletion object

◆ SetIndexPromptCol()

`OpenAICompletion Synapse.ML.Cognitive.OpenAICompletion.SetIndexPromptCol(string value)`

Sets value for indexPrompt column

- Parameters

- value: Name of the column containing the sequence of indexes to complete

- Returns

- New OpenAICompletion object

◆ SetLogProbs()

`OpenAICompletion Synapse.ML.Cognitive.OpenAICompletion.SetLogProbs(int value)`

Sets value for logProbs

- Parameters

- value: Include the log probabilities on the `logprobs` most likely tokens, as well as the chosen tokens. For example, if `logprobs` is 10, the API returns a list of the 10 most likely tokens. If `logprobs` is 0, only the chosen tokens have log probabilities returned. Minimum of 0 and maximum of 100 allowed.

- Returns

- New OpenAICompletion object

◆ SetLogProbsCol()

`OpenAICompletion Synapse.ML.Cognitive.OpenAICompletion.SetLogProbsCol(string value)`

Sets value for logProbs column

- Parameters

- value: Name of the column containing the logProbs value: the number of most likely tokens whose log probabilities are returned, in addition to the chosen tokens. Minimum of 0 and maximum of 100 allowed.

- Returns

- New OpenAICompletion object

◆ SetMaxTokens()

`OpenAICompletion Synapse.ML.Cognitive.OpenAICompletion.SetMaxTokens(int value)`

Sets value for maxTokens

- Parameters

- value: The maximum number of tokens to generate. Has a minimum of 0.

- Returns

- New OpenAICompletion object

◆ SetMaxTokensCol()

`OpenAICompletion Synapse.ML.Cognitive.OpenAICompletion.SetMaxTokensCol(string value)`

Sets value for maxTokens column

- Parameters

- value: Name of the column containing the maximum number of tokens to generate. Has a minimum of 0.

- Returns

- New OpenAICompletion object

◆ SetModel()

`OpenAICompletion Synapse.ML.Cognitive.OpenAICompletion.SetModel(string value)`

Sets value for model

- Parameters

- value: The name of the model to use

- Returns

- New OpenAICompletion object

◆ SetModelCol()

`OpenAICompletion Synapse.ML.Cognitive.OpenAICompletion.SetModelCol(string value)`

Sets value for model column

- Parameters

- value: Name of the column containing the name of the model to use

- Returns

- New OpenAICompletion object

◆ SetN()

`OpenAICompletion Synapse.ML.Cognitive.OpenAICompletion.SetN(int value)`

Sets value for n

- Parameters

- value: How many snippets to generate for each prompt. Minimum of 1 and maximum of 128 allowed.

- Returns

- New OpenAICompletion object

◆ SetNCol()

`OpenAICompletion Synapse.ML.Cognitive.OpenAICompletion.SetNCol(string value)`

Sets value for n column

- Parameters

- value: Name of the column containing how many snippets to generate for each prompt. Minimum of 1 and maximum of 128 allowed.

- Returns

- New OpenAICompletion object

◆ SetOutputCol()

`OpenAICompletion Synapse.ML.Cognitive.OpenAICompletion.SetOutputCol(string value)`

Sets value for outputCol

- Parameters

- value: The name of the output column

- Returns

- New OpenAICompletion object

◆ SetPresencePenalty()

`OpenAICompletion Synapse.ML.Cognitive.OpenAICompletion.SetPresencePenalty(double value)`

Sets value for presencePenalty

- Parameters

- value: How much to penalize new tokens based on whether they appear in the text so far. Increases the model's likelihood to talk about new topics. Has a minimum of -2 and a maximum of 2.

- Returns

- New OpenAICompletion object

◆ SetPresencePenaltyCol()

`OpenAICompletion Synapse.ML.Cognitive.OpenAICompletion.SetPresencePenaltyCol(string value)`

Sets value for presencePenalty column

- Parameters

- value: Name of the column containing the presence penalty, which penalizes new tokens based on whether they appear in the text so far. Has a minimum of -2 and a maximum of 2.

- Returns

- New OpenAICompletion object

◆ SetPrompt()

`OpenAICompletion Synapse.ML.Cognitive.OpenAICompletion.SetPrompt(string value)`

Sets value for prompt

- Parameters

- value: The text to complete

- Returns

- New OpenAICompletion object

◆ SetPromptCol()

`OpenAICompletion Synapse.ML.Cognitive.OpenAICompletion.SetPromptCol(string value)`

Sets value for prompt column

- Parameters

- value: Name of the column containing the text to complete

- Returns

- New OpenAICompletion object

◆ SetStop()

`OpenAICompletion Synapse.ML.Cognitive.OpenAICompletion.SetStop(string value)`

Sets value for stop

- Parameters

- value: A sequence which indicates the end of the current document.

- Returns

- New OpenAICompletion object

◆ SetStopCol()

`OpenAICompletion Synapse.ML.Cognitive.OpenAICompletion.SetStopCol(string value)`

Sets value for stop column

- Parameters

- value: Name of the column containing the sequence that indicates the end of the current document

- Returns

- New OpenAICompletion object

◆ SetSubscriptionKey()

`OpenAICompletion Synapse.ML.Cognitive.OpenAICompletion.SetSubscriptionKey(string value)`

Sets value for subscriptionKey

- Parameters

- value: The API key to use

- Returns

- New OpenAICompletion object

◆ SetSubscriptionKeyCol()

`OpenAICompletion Synapse.ML.Cognitive.OpenAICompletion.SetSubscriptionKeyCol(string value)`

Sets value for subscriptionKey column

- Parameters

- value: Name of the column containing the API key to use

- Returns

- New OpenAICompletion object

◆ SetTemperature()

`OpenAICompletion Synapse.ML.Cognitive.OpenAICompletion.SetTemperature(double value)`

Sets value for temperature

- Parameters

- value: What sampling temperature to use. Higher values mean the model takes more risks. Try 0.9 for more creative applications and 0 (argmax sampling) for ones with a well-defined answer. We generally recommend setting this or `top_p`, but not both. Minimum of 0 and maximum of 2 allowed.

- Returns

- New OpenAICompletion object

◆ SetTemperatureCol()

`OpenAICompletion Synapse.ML.Cognitive.OpenAICompletion.SetTemperatureCol(string value)`

Sets value for temperature column

- Parameters

- value: Name of the column containing the sampling temperature to use (see SetTemperature). We generally recommend setting this or `top_p`, but not both. Minimum of 0 and maximum of 2 allowed.

- Returns

- New OpenAICompletion object

◆ SetTimeout()

`OpenAICompletion Synapse.ML.Cognitive.OpenAICompletion.SetTimeout(double value)`

Sets value for timeout

- Parameters

- value: Number of seconds to wait before closing the connection

- Returns

- New OpenAICompletion object

◆ SetTopP()

`OpenAICompletion Synapse.ML.Cognitive.OpenAICompletion.SetTopP(double value)`

Sets value for topP

- Parameters

- value: An alternative to sampling with temperature, called nucleus sampling, where the model considers only the tokens within the top_p probability mass. For example, 0.1 means only the tokens comprising the top 10% probability mass are considered. We generally recommend setting this or `temperature`, but not both. Minimum of 0 and maximum of 1 allowed.

- Returns

- New OpenAICompletion object

◆ SetTopPCol()

`OpenAICompletion Synapse.ML.Cognitive.OpenAICompletion.SetTopPCol(string value)`

Sets value for topP column

- Parameters

- value: Name of the column containing the nucleus-sampling probability mass to use (see SetTopP). We generally recommend setting this or `temperature`, but not both. Minimum of 0 and maximum of 1 allowed.

- Returns

- New OpenAICompletion object

◆ SetUrl()

`OpenAICompletion Synapse.ML.Cognitive.OpenAICompletion.SetUrl(string value)`

Sets value for url

- Parameters

- value: URL of the service

- Returns

- New OpenAICompletion object

◆ SetUser()

`OpenAICompletion Synapse.ML.Cognitive.OpenAICompletion.SetUser(string value)`

Sets value for user

- Parameters

- value: The ID of the end user, for use in tracking and rate limiting.

- Returns

- New OpenAICompletion object

◆ SetUserCol()

`OpenAICompletion Synapse.ML.Cognitive.OpenAICompletion.SetUserCol(string value)`

Sets value for user column

- Parameters

- value: Name of the column containing the ID of the end user, for use in tracking and rate limiting.

- Returns

- New OpenAICompletion object
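The ranges stated on this page can be checked on the client before a request is sent. The sketch below collects them in one lookup table. `CompletionParamRanges` and `Validate` are hypothetical names, and the service performs its own validation. bestOf is checked only against its documented maximum, because no minimum is given here.

```csharp
using System.Collections.Generic;

// Hypothetical client-side check of the ranges documented on this page; not SynapseML code.
public static class CompletionParamRanges
{
    private static readonly Dictionary<string, (double Min, double Max)> Ranges = new()
    {
        ["bestOf"] = (double.NegativeInfinity, 128),   // only a maximum is documented
        ["n"] = (1, 128),
        ["logProbs"] = (0, 100),
        ["maxTokens"] = (0, double.PositiveInfinity),
        ["presencePenalty"] = (-2, 2),
        ["temperature"] = (0, 2),
        ["topP"] = (0, 1),
        ["cacheLevel"] = (0, 2),
    };

    // Returns null if the value is allowed (or no range is documented),
    // otherwise a message describing the violation.
    public static string Validate(string name, double value)
    {
        if (!Ranges.TryGetValue(name, out var range))
            return null;
        return value >= range.Min && value <= range.Max
            ? null
            : $"{name} = {value} is outside the documented range [{range.Min}, {range.Max}].";
    }
}
```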

The documentation for this class was generated from the following file:

- synapse/ml/cognitive/OpenAICompletion.cs

1.8.13