ONNXModel implements ONNXModel: a .NET wrapper for the SynapseML transformer that runs ONNX model inference on Spark DataFrames.

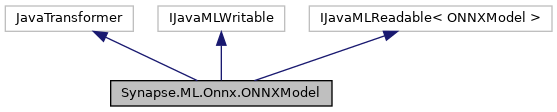

Inheritance diagram for Synapse.ML.Onnx.ONNXModel:

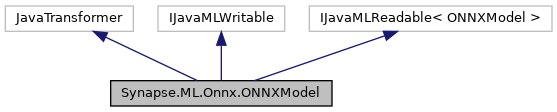

Collaboration diagram for Synapse.ML.Onnx.ONNXModel:

Public Member Functions

| Return type | Member | Description |
| --- | --- | --- |
| | `ONNXModel()` | Creates an ONNXModel without any parameters. |
| | `ONNXModel(string uid)` | Creates an ONNXModel with a UID that gives the ONNXModel a unique ID. |
| `ONNXModel` | `SetArgMaxDict(Dictionary<string, string> value)` | Sets the value for argMaxDict. |
| `ONNXModel` | `SetDeviceType(string value)` | Sets the value for deviceType. |
| `ONNXModel` | `SetFeedDict(Dictionary<string, string> value)` | Sets the value for feedDict. |
| `ONNXModel` | `SetFetchDict(Dictionary<string, string> value)` | Sets the value for fetchDict. |
| `ONNXModel` | `SetMiniBatcher(JavaTransformer value)` | Sets the value for miniBatcher. |
| `ONNXModel` | `SetModelPayload(object value)` | Sets the value for modelPayload. |
| `ONNXModel` | `SetOptimizationLevel(string value)` | Sets the value for optimizationLevel. |
| `ONNXModel` | `SetSoftMaxDict(Dictionary<string, string> value)` | Sets the value for softMaxDict. |
| `Dictionary<string, string>` | `GetArgMaxDict()` | Gets the argMaxDict value. |
| `string` | `GetDeviceType()` | Gets the deviceType value. |
| `Dictionary<string, string>` | `GetFeedDict()` | Gets the feedDict value. |
| `Dictionary<string, string>` | `GetFetchDict()` | Gets the fetchDict value. |
| `JavaTransformer` | `GetMiniBatcher()` | Gets the miniBatcher value. |
| `object` | `GetModelPayload()` | Gets the modelPayload value. |
| `string` | `GetOptimizationLevel()` | Gets the optimizationLevel value. |
| `Dictionary<string, string>` | `GetSoftMaxDict()` | Gets the softMaxDict value. |
| `void` | `Save(string path)` | Saves the object so that it can be loaded later using Load. Saved objects can also be shared with Scala by loading or saving them in Scala. |
| `JavaMLWriter` | `Write()` | Returns a JavaMLWriter for this object. |
| `JavaMLReader<ONNXModel>` | `Read()` | Gets the corresponding JavaMLReader instance. |

Static Public Member Functions

| Return type | Member | Description |
| --- | --- | --- |
| `static ONNXModel` | `Load(string path)` | Loads an ONNXModel that was previously saved using Save(string). |

Detailed Description

Constructor & Destructor Documentation

◆ ONNXModel() [1/2]

`Synapse.ML.Onnx.ONNXModel.ONNXModel()` (inline)

Creates an ONNXModel without any parameters.

◆ ONNXModel() [2/2]

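`Synapse.ML.Onnx.ONNXModel.ONNXModel(string uid)` (inline)

Creates an ONNXModel with a UID that gives the ONNXModel a unique ID.

- Parameters
  - `uid`: The unique ID for this ONNXModel.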

Member Function Documentation

◆ GetArgMaxDict()

`Dictionary<string, string> Synapse.ML.Onnx.ONNXModel.GetArgMaxDict()` (inline)

Gets the argMaxDict value.

- Returns
  - argMaxDict: A map between output DataFrame columns, where each value column is computed by taking the argmax of its key column. This can be used to convert probability outputs into predicted labels.
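To make the argMaxDict semantics concrete, here is a minimal standalone C# sketch of the per-row computation, assuming the key column holds a per-class probability vector (modeled here as a `float[]`). `ArgMax` is a hypothetical helper, not part of Synapse.ML; ONNXModel applies the equivalent operation to DataFrame columns for you.

```csharp
using System;

// Hypothetical helper (not a Synapse.ML API): returns the index of the largest
// entry, i.e. what an argMaxDict entry such as "probability" -> "prediction"
// would store in the value column for one row.
static int ArgMax(float[] scores)
{
    if (scores.Length == 0) throw new ArgumentException("empty vector", nameof(scores));
    int best = 0;
    for (int i = 1; i < scores.Length; i++)
    {
        if (scores[i] > scores[best]) best = i; // in this sketch, ties keep the first index
    }
    return best;
}

float[] probability = { 0.1f, 0.7f, 0.2f };
Console.WriteLine(ArgMax(probability)); // prints 1
```

The index returned is the predicted class label when the model's output classes are numbered from 0.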

◆ GetDeviceType()

`string Synapse.ML.Onnx.ONNXModel.GetDeviceType()`

Gets the deviceType value.

- Returns
  - deviceType: The device type that model inference runs on. Supported types are CPU and CUDA. If not specified, the device type is detected automatically.

◆ GetFeedDict()

`Dictionary<string, string> Synapse.ML.Onnx.ONNXModel.GetFeedDict()` (inline)

Gets the feedDict value.

- Returns
  - feedDict: A map from CNTK/ONNX model input variable names (keys) to column names of the input DataFrame (values).
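The direction of feedDict is easy to get backwards, so here is a minimal standalone C# sketch of how such a map resolves one row's inputs. `ResolveFeeds` is a hypothetical helper and a row is modeled as a plain `Dictionary<string, float[]>` (both assumptions, not Synapse.ML APIs); the names `input_1` and `features` are made up. The `feedDict` built below has the `Dictionary<string, string>` shape that SetFeedDict accepts.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical helper (not a Synapse.ML API): picks the column value that
// feeds each ONNX model input, following feedDict's direction:
// key = model input name, value = input DataFrame column name.
static Dictionary<string, float[]> ResolveFeeds(
    Dictionary<string, string> feedDict,
    Dictionary<string, float[]> row)
{
    var feeds = new Dictionary<string, float[]>();
    foreach (KeyValuePair<string, string> entry in feedDict)
    {
        if (!row.TryGetValue(entry.Value, out float[] column))
            throw new KeyNotFoundException($"Input column '{entry.Value}' is missing");
        feeds[entry.Key] = column; // keyed by the model input name
    }
    return feeds;
}

// "input_1" and "features" are made-up names for illustration.
var feedDict = new Dictionary<string, string> { ["input_1"] = "features" };
var row = new Dictionary<string, float[]> { ["features"] = new[] { 1f, 2f, 3f } };
var feeds = ResolveFeeds(feedDict, row);
Console.WriteLine(feeds["input_1"].Length); // prints 3
```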

◆ GetFetchDict()

`Dictionary<string, string> Synapse.ML.Onnx.ONNXModel.GetFetchDict()` (inline)

Gets the fetchDict value.

- Returns
  - fetchDict: A map from column names of the output DataFrame (keys) to CNTK/ONNX model output variable names (values).
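fetchDict runs in the opposite direction to feedDict: its keys are output column names and its values are model output names. The minimal C# sketch below illustrates that mapping for one row. `NameOutputColumns` is a hypothetical helper, model outputs are modeled as a `Dictionary<string, float[]>`, and `output_0` is a made-up output name; none of these are Synapse.ML APIs.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical helper (not a Synapse.ML API): names the output columns,
// following fetchDict's direction:
// key = output DataFrame column name, value = ONNX model output name.
static Dictionary<string, float[]> NameOutputColumns(
    Dictionary<string, string> fetchDict,
    Dictionary<string, float[]> modelOutputs)
{
    var columns = new Dictionary<string, float[]>();
    foreach (KeyValuePair<string, string> entry in fetchDict)
    {
        if (!modelOutputs.TryGetValue(entry.Value, out float[] tensor))
            throw new KeyNotFoundException($"Model output '{entry.Value}' is missing");
        columns[entry.Key] = tensor; // keyed by the output column name
    }
    return columns;
}

// "output_0" is a made-up model output name for illustration.
var fetchDict = new Dictionary<string, string> { ["rawPrediction"] = "output_0" };
var modelOutputs = new Dictionary<string, float[]> { ["output_0"] = new[] { 2.0f, 0.5f } };
var columns = NameOutputColumns(fetchDict, modelOutputs);
Console.WriteLine(columns["rawPrediction"][0]); // prints 2
```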

◆ GetMiniBatcher()

`JavaTransformer Synapse.ML.Onnx.ONNXModel.GetMiniBatcher()` (inline)

Gets the miniBatcher value.

- Returns
  - miniBatcher: The minibatcher to use.

◆ GetModelPayload()

`object Synapse.ML.Onnx.ONNXModel.GetModelPayload()`

Gets the modelPayload value.

- Returns
  - modelPayload: An array of bytes containing the serialized ONNX model.

◆ GetOptimizationLevel()

`string Synapse.ML.Onnx.ONNXModel.GetOptimizationLevel()`

Gets the optimizationLevel value.

- Returns
  - optimizationLevel: The optimization level for ONNX graph optimizations (details at https://onnxruntime.ai/docs/resources/graph-optimizations.html#graph-optimization-levels). Supported values are NO_OPT, BASIC_OPT, EXTENDED_OPT, and ALL_OPT. Default: ALL_OPT.
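As a sketch of the documented contract (four supported values, ALL_OPT when none is given), here is a hypothetical client-side check you might run before calling SetOptimizationLevel. `ResolveOptimizationLevel` is not a Synapse.ML API, and this section does not say whether ONNXModel itself rejects unknown values or matches case-insensitively; the exact, case-sensitive match below is an assumption.

```csharp
using System;

// Hypothetical client-side check (not a Synapse.ML API) mirroring the
// documented contract: four supported values, ALL_OPT when nothing is given.
static string ResolveOptimizationLevel(string requested)
{
    string[] supported = { "NO_OPT", "BASIC_OPT", "EXTENDED_OPT", "ALL_OPT" };
    if (string.IsNullOrEmpty(requested)) return "ALL_OPT"; // documented default
    // Exact, case-sensitive matching is an assumption of this sketch.
    if (Array.IndexOf(supported, requested) < 0)
        throw new ArgumentException($"Unsupported optimization level '{requested}'", nameof(requested));
    return requested;
}

Console.WriteLine(ResolveOptimizationLevel("BASIC_OPT")); // prints BASIC_OPT
Console.WriteLine(ResolveOptimizationLevel(null));        // prints ALL_OPT
```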

◆ GetSoftMaxDict()

`Dictionary<string, string> Synapse.ML.Onnx.ONNXModel.GetSoftMaxDict()` (inline)

Gets the softMaxDict value.

- Returns
  - softMaxDict: A map between output DataFrame columns, where each value column is computed by taking the softmax of its key column. For example, if the `rawPrediction` column contains logits, set softMaxDict to `Map("rawPrediction" -> "probability")` to obtain probability outputs.
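For reference, here is a minimal standalone C# sketch of the softmax that a `rawPrediction` to `probability` entry describes, applied to one row's logits. `Softmax` is a hypothetical helper, not a Synapse.ML API. It subtracts the maximum logit before exponentiating, a standard trick that leaves the result unchanged but avoids overflow for large logits.

```csharp
using System;

// Hypothetical helper (not a Synapse.ML API): numerically stable softmax over
// one row's logits, i.e. what a softMaxDict entry such as
// "rawPrediction" -> "probability" would store in the value column.
static double[] Softmax(double[] logits)
{
    double max = double.NegativeInfinity;
    foreach (double x in logits) max = Math.Max(max, x);

    var result = new double[logits.Length];
    double sum = 0;
    for (int i = 0; i < logits.Length; i++)
    {
        result[i] = Math.Exp(logits[i] - max); // shift by max to avoid overflow
        sum += result[i];
    }
    for (int i = 0; i < result.Length; i++) result[i] /= sum; // normalize to sum to 1
    return result;
}

double[] probability = Softmax(new[] { 2.0, 1.0, 0.1 });
Console.WriteLine(string.Join(", ", probability));
```

In C#, the map from the description would be passed to SetSoftMaxDict as `new Dictionary<string, string> { ["rawPrediction"] = "probability" }`.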

◆ Load()

`static ONNXModel Synapse.ML.Onnx.ONNXModel.Load(string path)`

Loads an ONNXModel that was previously saved using Save(string).

- Parameters
  - `path`: The path the ONNXModel was previously saved to.
- Returns
  - A new ONNXModel object loaded from `path`.

◆ Read()

`JavaMLReader<ONNXModel> Synapse.ML.Onnx.ONNXModel.Read()`

Gets the corresponding JavaMLReader instance.

- Returns
  - A JavaMLReader<ONNXModel> instance for this ML instance.

◆ Save()

`void Synapse.ML.Onnx.ONNXModel.Save(string path)`

Saves the object so that it can be loaded later using Load. Saved objects can also be shared with Scala by loading or saving them in Scala.

- Parameters
  - `path`: The path to save the object to.

◆ SetArgMaxDict()

`ONNXModel Synapse.ML.Onnx.ONNXModel.SetArgMaxDict(Dictionary<string, string> value)`

Sets the value for argMaxDict.

- Parameters
  - `value`: A map between output DataFrame columns, where each value column is computed by taking the argmax of its key column. This can be used to convert probability outputs into predicted labels.
- Returns
  - A new ONNXModel object.

◆ SetDeviceType()

`ONNXModel Synapse.ML.Onnx.ONNXModel.SetDeviceType(string value)`

Sets the value for deviceType.

- Parameters
  - `value`: The device type that model inference runs on. Supported types are CPU and CUDA. If not specified, the device type is detected automatically.
- Returns
  - A new ONNXModel object.

◆ SetFeedDict()

`ONNXModel Synapse.ML.Onnx.ONNXModel.SetFeedDict(Dictionary<string, string> value)`

Sets the value for feedDict.

- Parameters
  - `value`: A map from CNTK/ONNX model input variable names (keys) to column names of the input DataFrame (values).
- Returns
  - A new ONNXModel object.

◆ SetFetchDict()

`ONNXModel Synapse.ML.Onnx.ONNXModel.SetFetchDict(Dictionary<string, string> value)`

Sets the value for fetchDict.

- Parameters
  - `value`: A map from column names of the output DataFrame (keys) to CNTK/ONNX model output variable names (values).
- Returns
  - A new ONNXModel object.

◆ SetMiniBatcher()

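`ONNXModel Synapse.ML.Onnx.ONNXModel.SetMiniBatcher(JavaTransformer value)`

Sets the value for miniBatcher.

- Parameters
  - `value`: The minibatcher to use.
- Returns
  - A new ONNXModel object.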

◆ SetModelPayload()

`ONNXModel Synapse.ML.Onnx.ONNXModel.SetModelPayload(object value)`

Sets the value for modelPayload.

- Parameters
  - `value`: An array of bytes containing the serialized ONNX model.
- Returns
  - A new ONNXModel object.

◆ SetOptimizationLevel()

`ONNXModel Synapse.ML.Onnx.ONNXModel.SetOptimizationLevel(string value)`

Sets the value for optimizationLevel.

- Parameters
  - `value`: The optimization level for ONNX graph optimizations (details at https://onnxruntime.ai/docs/resources/graph-optimizations.html#graph-optimization-levels). Supported values are NO_OPT, BASIC_OPT, EXTENDED_OPT, and ALL_OPT. Default: ALL_OPT.
- Returns
  - A new ONNXModel object.

◆ SetSoftMaxDict()

`ONNXModel Synapse.ML.Onnx.ONNXModel.SetSoftMaxDict(Dictionary<string, string> value)`

Sets the value for softMaxDict.

- Parameters
  - `value`: A map between output DataFrame columns, where each value column is computed by taking the softmax of its key column. For example, if the `rawPrediction` column contains logits, set softMaxDict to `Map("rawPrediction" -> "probability")` to obtain probability outputs.
- Returns
  - A new ONNXModel object.

The documentation for this class was generated from the following file:

- synapse/ml/onnx/ONNXModel.cs

Generated by Doxygen 1.8.17
