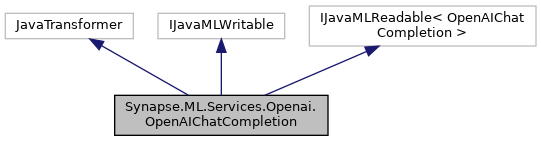

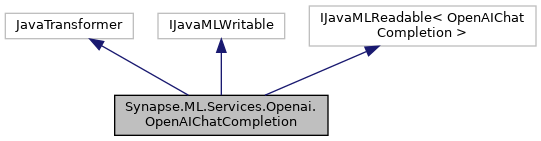

OpenAIChatCompletion wraps the SynapseML OpenAIChatCompletion transformer, which generates chat completions for the messages in an input column.

Public Member Functions

| Return type | Member | Description |
|---|---|---|
| | OpenAIChatCompletion () | Creates an OpenAIChatCompletion without any parameters. |
| | OpenAIChatCompletion (string uid) | Creates an OpenAIChatCompletion with a UID that is used to give the OpenAIChatCompletion a unique ID. |
| OpenAIChatCompletion | SetAADToken (string value) | Sets value for AADToken |
| OpenAIChatCompletion | SetAADTokenCol (string value) | Sets value for AADToken column |
| OpenAIChatCompletion | SetCustomAuthHeader (string value) | Sets value for CustomAuthHeader |
| OpenAIChatCompletion | SetCustomAuthHeaderCol (string value) | Sets value for CustomAuthHeader column |
| OpenAIChatCompletion | SetApiVersion (string value) | Sets value for apiVersion |
| OpenAIChatCompletion | SetApiVersionCol (string value) | Sets value for apiVersion column |
| OpenAIChatCompletion | SetBestOf (int value) | Sets value for bestOf |
| OpenAIChatCompletion | SetBestOfCol (string value) | Sets value for bestOf column |
| OpenAIChatCompletion | SetCacheLevel (int value) | Sets value for cacheLevel |
| OpenAIChatCompletion | SetCacheLevelCol (string value) | Sets value for cacheLevel column |
| OpenAIChatCompletion | SetConcurrency (int value) | Sets value for concurrency |
| OpenAIChatCompletion | SetConcurrentTimeout (double value) | Sets value for concurrentTimeout |
| OpenAIChatCompletion | SetDeploymentName (string value) | Sets value for deploymentName |
| OpenAIChatCompletion | SetDeploymentNameCol (string value) | Sets value for deploymentName column |
| OpenAIChatCompletion | SetEcho (bool value) | Sets value for echo |
| OpenAIChatCompletion | SetEchoCol (string value) | Sets value for echo column |
| OpenAIChatCompletion | SetErrorCol (string value) | Sets value for errorCol |
| OpenAIChatCompletion | SetFrequencyPenalty (double value) | Sets value for frequencyPenalty |
| OpenAIChatCompletion | SetFrequencyPenaltyCol (string value) | Sets value for frequencyPenalty column |
| OpenAIChatCompletion | SetHandler (object value) | Sets value for handler |
| OpenAIChatCompletion | SetLogProbs (int value) | Sets value for logProbs |
| OpenAIChatCompletion | SetLogProbsCol (string value) | Sets value for logProbs column |
| OpenAIChatCompletion | SetMaxTokens (int value) | Sets value for maxTokens |
| OpenAIChatCompletion | SetMaxTokensCol (string value) | Sets value for maxTokens column |
| OpenAIChatCompletion | SetMessagesCol (string value) | Sets value for messagesCol |
| OpenAIChatCompletion | SetN (int value) | Sets value for n |
| OpenAIChatCompletion | SetNCol (string value) | Sets value for n column |
| OpenAIChatCompletion | SetOutputCol (string value) | Sets value for outputCol |
| OpenAIChatCompletion | SetPresencePenalty (double value) | Sets value for presencePenalty |
| OpenAIChatCompletion | SetPresencePenaltyCol (string value) | Sets value for presencePenalty column |
| OpenAIChatCompletion | SetStop (string value) | Sets value for stop |
| OpenAIChatCompletion | SetStopCol (string value) | Sets value for stop column |
| OpenAIChatCompletion | SetSubscriptionKey (string value) | Sets value for subscriptionKey |
| OpenAIChatCompletion | SetSubscriptionKeyCol (string value) | Sets value for subscriptionKey column |
| OpenAIChatCompletion | SetTemperature (double value) | Sets value for temperature |
| OpenAIChatCompletion | SetTemperatureCol (string value) | Sets value for temperature column |
| OpenAIChatCompletion | SetTimeout (double value) | Sets value for timeout |
| OpenAIChatCompletion | SetTopP (double value) | Sets value for topP |
| OpenAIChatCompletion | SetTopPCol (string value) | Sets value for topP column |
| OpenAIChatCompletion | SetUrl (string value) | Sets value for url |
| OpenAIChatCompletion | SetUser (string value) | Sets value for user |
| OpenAIChatCompletion | SetUserCol (string value) | Sets value for user column |
| string | GetAADToken () | Gets AADToken value |
| string | GetCustomAuthHeader () | Gets CustomAuthHeader value |
| string | GetApiVersion () | Gets apiVersion value |
| int | GetBestOf () | Gets bestOf value |
| int | GetCacheLevel () | Gets cacheLevel value |
| int | GetConcurrency () | Gets concurrency value |
| double | GetConcurrentTimeout () | Gets concurrentTimeout value |
| string | GetDeploymentName () | Gets deploymentName value |
| bool | GetEcho () | Gets echo value |
| string | GetErrorCol () | Gets errorCol value |
| double | GetFrequencyPenalty () | Gets frequencyPenalty value |
| object | GetHandler () | Gets handler value |
| int | GetLogProbs () | Gets logProbs value |
| int | GetMaxTokens () | Gets maxTokens value |
| string | GetMessagesCol () | Gets messagesCol value |
| int | GetN () | Gets n value |
| string | GetOutputCol () | Gets outputCol value |
| double | GetPresencePenalty () | Gets presencePenalty value |
| string | GetStop () | Gets stop value |
| string | GetSubscriptionKey () | Gets subscriptionKey value |
| double | GetTemperature () | Gets temperature value |
| double | GetTimeout () | Gets timeout value |
| double | GetTopP () | Gets topP value |
| string | GetUrl () | Gets url value |
| string | GetUser () | Gets user value |
| void | Save (string path) | Saves the object so that it can be loaded later using Load. Note that these objects can be shared with Scala by loading or saving them in Scala. |
| JavaMLWriter | Write () | |
| JavaMLReader< OpenAIChatCompletion > | Read () | Get the corresponding JavaMLReader instance. |
| OpenAIChatCompletion | SetCustomServiceName (string value) | Sets value for service name |
| OpenAIChatCompletion | SetEndpoint (string value) | Sets value for endpoint |

Static Public Member Functions

| Return type | Member | Description |
|---|---|---|
| static OpenAIChatCompletion | Load (string path) | Loads the OpenAIChatCompletion that was previously saved using Save(string). |

Constructor & Destructor Documentation

◆ OpenAIChatCompletion() [1/2]

| Synapse.ML.Services.Openai.OpenAIChatCompletion.OpenAIChatCompletion | ( | ) | inline |

Creates an OpenAIChatCompletion without any parameters.

◆ OpenAIChatCompletion() [2/2]

| Synapse.ML.Services.Openai.OpenAIChatCompletion.OpenAIChatCompletion | ( | string | uid | ) | inline |

Creates an OpenAIChatCompletion with a UID that is used to give the OpenAIChatCompletion a unique ID.

- Parameters

- uid: An immutable unique ID for the object and its derivatives.

Member Function Documentation

◆ GetAADToken()

| string Synapse.ML.Services.Openai.OpenAIChatCompletion.GetAADToken | ( | ) |

Gets AADToken value

- Returns

- AADToken: AAD Token used for authentication

◆ GetApiVersion()

| string Synapse.ML.Services.Openai.OpenAIChatCompletion.GetApiVersion | ( | ) |

Gets apiVersion value

- Returns

- apiVersion: version of the api

◆ GetBestOf()

| int Synapse.ML.Services.Openai.OpenAIChatCompletion.GetBestOf | ( | ) |

Gets bestOf value

- Returns

- bestOf: How many generations to create server side, and display only the best. Will not stream intermediate progress if best_of > 1. Has maximum value of 128.

◆ GetCacheLevel()

| int Synapse.ML.Services.Openai.OpenAIChatCompletion.GetCacheLevel | ( | ) |

Gets cacheLevel value

- Returns

- cacheLevel: Can be used to disable server-side caching: 0 = no cache, 1 = prompt-prefix caching enabled, 2 = full cache.

◆ GetConcurrency()

| int Synapse.ML.Services.Openai.OpenAIChatCompletion.GetConcurrency | ( | ) |

Gets concurrency value

- Returns

- concurrency: max number of concurrent calls

◆ GetConcurrentTimeout()

| double Synapse.ML.Services.Openai.OpenAIChatCompletion.GetConcurrentTimeout | ( | ) |

Gets concurrentTimeout value

- Returns

- concurrentTimeout: max number of seconds to wait on futures if concurrency >= 1

◆ GetCustomAuthHeader()

| string Synapse.ML.Services.Openai.OpenAIChatCompletion.GetCustomAuthHeader | ( | ) |

Gets CustomAuthHeader value

- Returns

- CustomAuthHeader: A custom value for the Authorization header

◆ GetDeploymentName()

| string Synapse.ML.Services.Openai.OpenAIChatCompletion.GetDeploymentName | ( | ) |

Gets deploymentName value

- Returns

- deploymentName: The name of the deployment

◆ GetEcho()

| bool Synapse.ML.Services.Openai.OpenAIChatCompletion.GetEcho | ( | ) |

Gets echo value

- Returns

- echo: Echo back the prompt in addition to the completion

◆ GetErrorCol()

| string Synapse.ML.Services.Openai.OpenAIChatCompletion.GetErrorCol | ( | ) |

Gets errorCol value

- Returns

- errorCol: column to hold HTTP errors

◆ GetFrequencyPenalty()

| double Synapse.ML.Services.Openai.OpenAIChatCompletion.GetFrequencyPenalty | ( | ) |

Gets frequencyPenalty value

- Returns

- frequencyPenalty: How much to penalize new tokens based on their existing frequency in the text so far. Decreases the model's likelihood of repeating the same line verbatim.

◆ GetHandler()

| object Synapse.ML.Services.Openai.OpenAIChatCompletion.GetHandler | ( | ) |

Gets handler value

- Returns

- handler: Which strategy to use when handling requests

◆ GetLogProbs()

| int Synapse.ML.Services.Openai.OpenAIChatCompletion.GetLogProbs | ( | ) |

Gets logProbs value

- Returns

- logProbs: Include the log probabilities on the `logprobs` most likely tokens, as well as the chosen tokens. For example, if `logprobs` is 10, the API will return a list of the 10 most likely tokens. If `logprobs` is 0, only the chosen tokens will have logprobs returned. Minimum of 0 and maximum of 100 allowed.

◆ GetMaxTokens()

| int Synapse.ML.Services.Openai.OpenAIChatCompletion.GetMaxTokens | ( | ) |

Gets maxTokens value

- Returns

- maxTokens: The maximum number of tokens to generate. Has minimum of 0.

◆ GetMessagesCol()

| string Synapse.ML.Services.Openai.OpenAIChatCompletion.GetMessagesCol | ( | ) |

Gets messagesCol value

- Returns

- messagesCol: The column of messages to generate chat completions for, in the chat format. This column should have type Array(Struct(role: String, content: String)).

◆ GetN()

| int Synapse.ML.Services.Openai.OpenAIChatCompletion.GetN | ( | ) |

Gets n value

- Returns

- n: How many snippets to generate for each prompt. Minimum of 1 and maximum of 128 allowed.

◆ GetOutputCol()

| string Synapse.ML.Services.Openai.OpenAIChatCompletion.GetOutputCol | ( | ) |

Gets outputCol value

- Returns

- outputCol: The name of the output column

◆ GetPresencePenalty()

| double Synapse.ML.Services.Openai.OpenAIChatCompletion.GetPresencePenalty | ( | ) |

Gets presencePenalty value

- Returns

- presencePenalty: How much to penalize new tokens based on whether they appear in the text so far. Increases the model's likelihood of talking about new topics. Has minimum of -2 and maximum of 2.

◆ GetStop()

| string Synapse.ML.Services.Openai.OpenAIChatCompletion.GetStop | ( | ) |

Gets stop value

- Returns

- stop: A sequence which indicates the end of the current document.

◆ GetSubscriptionKey()

| string Synapse.ML.Services.Openai.OpenAIChatCompletion.GetSubscriptionKey | ( | ) |

Gets subscriptionKey value

- Returns

- subscriptionKey: the API key to use

◆ GetTemperature()

| double Synapse.ML.Services.Openai.OpenAIChatCompletion.GetTemperature | ( | ) |

Gets temperature value

- Returns

- temperature: What sampling temperature to use. Higher values mean the model will take more risks. Try 0.9 for more creative applications, and 0 (argmax sampling) for ones with a well-defined answer. We generally recommend using this or `top_p` but not both. Minimum of 0 and maximum of 2 allowed.

◆ GetTimeout()

| double Synapse.ML.Services.Openai.OpenAIChatCompletion.GetTimeout | ( | ) |

Gets timeout value

- Returns

- timeout: number of seconds to wait before closing the connection

◆ GetTopP()

| double Synapse.ML.Services.Openai.OpenAIChatCompletion.GetTopP | ( | ) |

Gets topP value

- Returns

- topP: An alternative to sampling with temperature, called nucleus sampling, where the model considers the results of the tokens with `top_p` probability mass. For example, 0.1 means only the tokens comprising the top 10 percent probability mass are considered. We generally recommend using this or `temperature` but not both. Minimum of 0 and maximum of 1 allowed.

◆ GetUrl()

| string Synapse.ML.Services.Openai.OpenAIChatCompletion.GetUrl | ( | ) |

Gets url value

- Returns

- url: Url of the service

◆ GetUser()

| string Synapse.ML.Services.Openai.OpenAIChatCompletion.GetUser | ( | ) |

Gets user value

- Returns

- user: The ID of the end-user, for use in tracking and rate-limiting.

◆ Load()

| static OpenAIChatCompletion Synapse.ML.Services.Openai.OpenAIChatCompletion.Load | ( | string | path | ) |

Loads the OpenAIChatCompletion that was previously saved using Save(string).

- Parameters

- path: The path the previous OpenAIChatCompletion was saved to

- Returns

- New OpenAIChatCompletion object, loaded from path.

◆ Read()

| JavaMLReader<OpenAIChatCompletion> Synapse.ML.Services.Openai.OpenAIChatCompletion.Read | ( | ) |

Get the corresponding JavaMLReader instance.

- Returns

- a JavaMLReader<OpenAIChatCompletion> instance for this ML instance.

◆ Save()

| void Synapse.ML.Services.Openai.OpenAIChatCompletion.Save | ( | string | path | ) |

Saves the object so that it can be loaded later using Load. Note that these objects can be shared with Scala by Loading or Saving in Scala.

- Parameters

- path: The path to save the object to

◆ SetAADToken()

| OpenAIChatCompletion Synapse.ML.Services.Openai.OpenAIChatCompletion.SetAADToken | ( | string | value | ) |

Sets value for AADToken

- Parameters

- value: AAD Token used for authentication

- Returns

- New OpenAIChatCompletion object

◆ SetAADTokenCol()

| OpenAIChatCompletion Synapse.ML.Services.Openai.OpenAIChatCompletion.SetAADTokenCol | ( | string | value | ) |

Sets value for AADToken column

- Parameters

- value: Name of the column that holds the AAD token used for authentication

- Returns

- New OpenAIChatCompletion object
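Each parameter on this page comes as a scalar setter (for example SetAADToken) and a column setter (SetAADTokenCol). The sketch below models that pair under an assumption: the column setter stores the name of a column whose per-row value is used instead of one constant for every row, and whichever setter was called last wins. `ServiceParamSketch<T>` and the dictionary standing in for a row are hypothetical illustrations, not SynapseML types.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical model of a SetX / SetXCol pair; not a SynapseML type.
public sealed class ServiceParamSketch<T>
{
    private T _constant;
    private bool _hasConstant;
    private string _column;

    // Mirrors SetX(value): one value used for every row.
    public ServiceParamSketch<T> Set(T value)
    {
        _constant = value;
        _hasConstant = true;
        _column = null;
        return this;
    }

    // Mirrors SetXCol(columnName): read the value from this column of each row.
    public ServiceParamSketch<T> SetCol(string columnName)
    {
        _column = columnName ?? throw new ArgumentNullException(nameof(columnName));
        _hasConstant = false;
        return this;
    }

    // Resolves the effective value for one row, modeled as column name -> value.
    public T Resolve(IReadOnlyDictionary<string, object> row)
    {
        if (_column != null)
        {
            return (T)row[_column];
        }
        if (_hasConstant)
        {
            return _constant;
        }
        throw new InvalidOperationException("Parameter was never set.");
    }
}
```

Under this model, a call such as SetTemperature(0.2) corresponds to `Set`, and SetTemperatureCol("temp") to `SetCol`, which lets every row carry its own temperature.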

◆ SetApiVersion()

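| OpenAIChatCompletion Synapse.ML.Services.Openai.OpenAIChatCompletion.SetApiVersion | ( | string | value | ) |

Sets value for apiVersion

- Parameters

- value: version of the api

- Returns

- New OpenAIChatCompletion object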

◆ SetApiVersionCol()

| OpenAIChatCompletion Synapse.ML.Services.Openai.OpenAIChatCompletion.SetApiVersionCol | ( | string | value | ) |

Sets value for apiVersion column

- Parameters

- value: Name of the column that holds the version of the api

- Returns

- New OpenAIChatCompletion object

◆ SetBestOf()

| OpenAIChatCompletion Synapse.ML.Services.Openai.OpenAIChatCompletion.SetBestOf | ( | int | value | ) |

Sets value for bestOf

- Parameters

- value: How many generations to create server side, and display only the best. Will not stream intermediate progress if best_of > 1. Has maximum value of 128.

- Returns

- New OpenAIChatCompletion object

◆ SetBestOfCol()

| OpenAIChatCompletion Synapse.ML.Services.Openai.OpenAIChatCompletion.SetBestOfCol | ( | string | value | ) |

Sets value for bestOf column

- Parameters

- value: Name of the column that holds bestOf: how many generations to create server side, displaying only the best. Will not stream intermediate progress if best_of > 1. Has maximum value of 128.

- Returns

- New OpenAIChatCompletion object

◆ SetCacheLevel()

| OpenAIChatCompletion Synapse.ML.Services.Openai.OpenAIChatCompletion.SetCacheLevel | ( | int | value | ) |

Sets value for cacheLevel

- Parameters

- value: Can be used to disable server-side caching: 0 = no cache, 1 = prompt-prefix caching enabled, 2 = full cache.

- Returns

- New OpenAIChatCompletion object

◆ SetCacheLevelCol()

| OpenAIChatCompletion Synapse.ML.Services.Openai.OpenAIChatCompletion.SetCacheLevelCol | ( | string | value | ) |

Sets value for cacheLevel column

- Parameters

- value: Name of the column that holds cacheLevel, which can be used to disable server-side caching: 0 = no cache, 1 = prompt-prefix caching enabled, 2 = full cache.

- Returns

- New OpenAIChatCompletion object
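The documented cacheLevel values are bare integers. A minimal sketch, assuming you want named constants on the caller side: the hypothetical `CacheLevelSketch` enum maps each name to the documented integer, and `ToParamValue` rejects undefined values before they reach SetCacheLevel(int).

```csharp
using System;

// Hypothetical names for the documented cacheLevel integers; not a SynapseML type.
public enum CacheLevelSketch
{
    NoCache = 0,      // 0 = no cache
    PromptPrefix = 1, // 1 = prompt-prefix caching enabled
    FullCache = 2,    // 2 = full cache
}

public static class CacheLevelSketchExtensions
{
    // Converts to the int that SetCacheLevel(int) takes, rejecting undefined values.
    public static int ToParamValue(this CacheLevelSketch level)
    {
        if (!Enum.IsDefined(typeof(CacheLevelSketch), level))
        {
            throw new ArgumentOutOfRangeException(nameof(level));
        }
        return (int)level;
    }
}
```

With this in place, SetCacheLevel(CacheLevelSketch.NoCache.ToParamValue()) reads as "disable server-side caching" at the call site.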

◆ SetConcurrency()

| OpenAIChatCompletion Synapse.ML.Services.Openai.OpenAIChatCompletion.SetConcurrency | ( | int | value | ) |

Sets value for concurrency

- Parameters

- value: max number of concurrent calls

- Returns

- New OpenAIChatCompletion object

◆ SetConcurrentTimeout()

| OpenAIChatCompletion Synapse.ML.Services.Openai.OpenAIChatCompletion.SetConcurrentTimeout | ( | double | value | ) |

Sets value for concurrentTimeout

- Parameters

- value: max number of seconds to wait on futures if concurrency >= 1

- Returns

- New OpenAIChatCompletion object
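concurrency caps how many service calls are in flight at once, and concurrentTimeout bounds how long to wait on them. The sketch below illustrates both ideas with SemaphoreSlim and Task.WhenAny against simulated calls, where Task.Delay stands in for the HTTP request. It is a hypothetical model of the semantics, not how SynapseML schedules requests internally; `ConcurrencySketch` is an illustrative name.

```csharp
using System;
using System.Linq;
using System.Threading;
using System.Threading.Tasks;

// Hypothetical model of concurrency / concurrentTimeout; not SynapseML's scheduler.
public static class ConcurrencySketch
{
    // Runs `requests` simulated calls with at most `concurrency` in flight and
    // returns the highest number of simultaneous calls that was observed.
    public static async Task<int> RunAsync(int requests, int concurrency, double timeoutSeconds)
    {
        using var gate = new SemaphoreSlim(concurrency);
        int inFlight = 0;
        int maxInFlight = 0;

        async Task CallAsync(int id)
        {
            await gate.WaitAsync(); // waits while `concurrency` calls are already running
            try
            {
                int now = Interlocked.Increment(ref inFlight);
                int seen;
                while (now > (seen = Volatile.Read(ref maxInFlight)))
                {
                    Interlocked.CompareExchange(ref maxInFlight, now, seen);
                }

                // Task.Delay stands in for the HTTP request to the service.
                Task call = Task.Delay(10);
                Task first = await Task.WhenAny(call, Task.Delay(TimeSpan.FromSeconds(timeoutSeconds)));
                if (first != call)
                {
                    throw new TimeoutException($"call {id} exceeded {timeoutSeconds} s");
                }
            }
            finally
            {
                Interlocked.Decrement(ref inFlight);
                gate.Release();
            }
        }

        await Task.WhenAll(Enumerable.Range(0, requests).Select(i => CallAsync(i)));
        return maxInFlight;
    }
}
```

Raising the concurrency limit trades more simultaneous load on the deployment for shorter wall-clock time; the timeout keeps one slow call from stalling the whole batch.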

◆ SetCustomAuthHeader()

| OpenAIChatCompletion Synapse.ML.Services.Openai.OpenAIChatCompletion.SetCustomAuthHeader | ( | string | value | ) |

Sets value for CustomAuthHeader

- Parameters

- value: A custom value for the Authorization header

- Returns

- New OpenAIChatCompletion object

◆ SetCustomAuthHeaderCol()

| OpenAIChatCompletion Synapse.ML.Services.Openai.OpenAIChatCompletion.SetCustomAuthHeaderCol | ( | string | value | ) |

Sets value for CustomAuthHeader column

- Parameters

- value: Name of the column that holds a custom value for the Authorization header

- Returns

- New OpenAIChatCompletion object

◆ SetCustomServiceName()

| OpenAIChatCompletion Synapse.ML.Services.Openai.OpenAIChatCompletion.SetCustomServiceName | ( | string | value | ) |

Sets value for service name

- Parameters

- value: Service name of the cognitive service, if it uses a custom domain

- Returns

- New OpenAIChatCompletion object

◆ SetDeploymentName()

| OpenAIChatCompletion Synapse.ML.Services.Openai.OpenAIChatCompletion.SetDeploymentName | ( | string | value | ) |

Sets value for deploymentName

- Parameters

- value: The name of the deployment

- Returns

- New OpenAIChatCompletion object

◆ SetDeploymentNameCol()

| OpenAIChatCompletion Synapse.ML.Services.Openai.OpenAIChatCompletion.SetDeploymentNameCol | ( | string | value | ) |

Sets value for deploymentName column

- Parameters

- value: Name of the column that holds the name of the deployment

- Returns

- New OpenAIChatCompletion object

◆ SetEcho()

| OpenAIChatCompletion Synapse.ML.Services.Openai.OpenAIChatCompletion.SetEcho | ( | bool | value | ) |

Sets value for echo

- Parameters

- value: Echo back the prompt in addition to the completion

- Returns

- New OpenAIChatCompletion object

◆ SetEchoCol()

| OpenAIChatCompletion Synapse.ML.Services.Openai.OpenAIChatCompletion.SetEchoCol | ( | string | value | ) |

Sets value for echo column

- Parameters

- value: Name of the column that holds the echo flag (echo back the prompt in addition to the completion)

- Returns

- New OpenAIChatCompletion object

◆ SetEndpoint()

| OpenAIChatCompletion Synapse.ML.Services.Openai.OpenAIChatCompletion.SetEndpoint | ( | string | value | ) |

Sets value for endpoint

- Parameters

- value: Endpoint of the cognitive service

- Returns

- New OpenAIChatCompletion object

◆ SetErrorCol()

| OpenAIChatCompletion Synapse.ML.Services.Openai.OpenAIChatCompletion.SetErrorCol | ( | string | value | ) |

Sets value for errorCol

- Parameters

- value: Name of the column to hold HTTP errors

- Returns

- New OpenAIChatCompletion object

◆ SetFrequencyPenalty()

| OpenAIChatCompletion Synapse.ML.Services.Openai.OpenAIChatCompletion.SetFrequencyPenalty | ( | double | value | ) |

Sets value for frequencyPenalty

- Parameters

- value: How much to penalize new tokens based on their existing frequency in the text so far. Decreases the model's likelihood of repeating the same line verbatim.

- Returns

- New OpenAIChatCompletion object

◆ SetFrequencyPenaltyCol()

| OpenAIChatCompletion Synapse.ML.Services.Openai.OpenAIChatCompletion.SetFrequencyPenaltyCol | ( | string | value | ) |

Sets value for frequencyPenalty column

- Parameters

- value: Name of the column that holds frequencyPenalty: how much to penalize new tokens based on their existing frequency in the text so far. Decreases the model's likelihood of repeating the same line verbatim.

- Returns

- New OpenAIChatCompletion object

◆ SetHandler()

| OpenAIChatCompletion Synapse.ML.Services.Openai.OpenAIChatCompletion.SetHandler | ( | object | value | ) |

Sets value for handler

- Parameters

- value: Which strategy to use when handling requests

- Returns

- New OpenAIChatCompletion object

◆ SetLogProbs()

| OpenAIChatCompletion Synapse.ML.Services.Openai.OpenAIChatCompletion.SetLogProbs | ( | int | value | ) |

Sets value for logProbs

- Parameters

- value: Include the log probabilities on the `logprobs` most likely tokens, as well as the chosen tokens. For example, if `logprobs` is 10, the API will return a list of the 10 most likely tokens. If `logprobs` is 0, only the chosen tokens will have logprobs returned. Minimum of 0 and maximum of 100 allowed.

- Returns

- New OpenAIChatCompletion object

◆ SetLogProbsCol()

| OpenAIChatCompletion Synapse.ML.Services.Openai.OpenAIChatCompletion.SetLogProbsCol | ( | string | value | ) |

Sets value for logProbs column

- Parameters

- value: Name of the column that holds logProbs: include the log probabilities on the `logprobs` most likely tokens, as well as the chosen tokens. For example, if `logprobs` is 10, the API will return a list of the 10 most likely tokens. If `logprobs` is 0, only the chosen tokens will have logprobs returned. Minimum of 0 and maximum of 100 allowed.

- Returns

- New OpenAIChatCompletion object
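A logProbs value of k asks for the log probabilities of the k most likely tokens at each position. A minimal sketch of that selection for a single position, over a made-up toy distribution; `LogProbsSketch.TopK` is hypothetical and the service computes these values itself.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical illustration of the top-k log probabilities for one token position.
public static class LogProbsSketch
{
    public static IReadOnlyList<(string Token, double LogProb)> TopK(
        IReadOnlyDictionary<string, double> probabilities, int k)
    {
        if (k < 0 || k > 100)
        {
            throw new ArgumentOutOfRangeException(nameof(k), "documented range is 0..100");
        }

        // Most likely candidates first, keeping k of them, reported as natural logs.
        return probabilities
            .OrderByDescending(p => p.Value)
            .Take(k)
            .Select(p => (Token: p.Key, LogProb: Math.Log(p.Value)))
            .ToList();
    }
}
```

With k = 0 the list of alternatives is empty, which matches the note that only the chosen tokens then carry logprobs.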

◆ SetMaxTokens()

| OpenAIChatCompletion Synapse.ML.Services.Openai.OpenAIChatCompletion.SetMaxTokens | ( | int | value | ) |

Sets value for maxTokens

- Parameters

- value: The maximum number of tokens to generate. Has minimum of 0.

- Returns

- New OpenAIChatCompletion object

◆ SetMaxTokensCol()

| OpenAIChatCompletion Synapse.ML.Services.Openai.OpenAIChatCompletion.SetMaxTokensCol | ( | string | value | ) |

Sets value for maxTokens column

- Parameters

- value: Name of the column that holds maxTokens, the maximum number of tokens to generate. Has minimum of 0.

- Returns

- New OpenAIChatCompletion object

◆ SetMessagesCol()

| OpenAIChatCompletion Synapse.ML.Services.Openai.OpenAIChatCompletion.SetMessagesCol | ( | string | value | ) |

Sets value for messagesCol

- Parameters

- value: Name of the column of messages to generate chat completions for, in the chat format. This column should have type Array(Struct(role: String, content: String)).

- Returns

- New OpenAIChatCompletion object
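Each cell of the messages column is an array of (role, content) structs. A minimal C# mirror of one such cell, assuming the common "system" and "user" chat roles; `ChatMessageSketch` and `MessagesSketch` are hypothetical types for illustration and do not build a Spark DataFrame.

```csharp
using System.Collections.Generic;
using System.Linq;

// Hypothetical mirror of Struct(role: String, content: String).
public sealed record ChatMessageSketch(string Role, string Content);

public static class MessagesSketch
{
    // One row's conversation: a system instruction followed by a user turn.
    public static ChatMessageSketch[] ExampleConversation() => new[]
    {
        new ChatMessageSketch("system", "You are a helpful assistant."),
        new ChatMessageSketch("user", "Summarize SynapseML in one sentence."),
    };

    // Every element should carry a role and non-null content, matching the struct's two fields.
    public static bool IsWellFormed(IEnumerable<ChatMessageSketch> messages) =>
        messages.All(m => !string.IsNullOrEmpty(m.Role) && m.Content != null);
}
```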

◆ SetN()

| OpenAIChatCompletion Synapse.ML.Services.Openai.OpenAIChatCompletion.SetN | ( | int | value | ) |

Sets value for n

- Parameters

- value: How many snippets to generate for each prompt. Minimum of 1 and maximum of 128 allowed.

- Returns

- New OpenAIChatCompletion object

◆ SetNCol()

| OpenAIChatCompletion Synapse.ML.Services.Openai.OpenAIChatCompletion.SetNCol | ( | string | value | ) |

Sets value for n column

- Parameters

- value: Name of the column that holds n, how many snippets to generate for each prompt. Minimum of 1 and maximum of 128 allowed.

- Returns

- New OpenAIChatCompletion object
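Several parameters on this page state allowed ranges. A minimal sketch, assuming you want to catch out-of-range values on the client before calling the setters: the hypothetical `RangeSketch.Validate` checks the limits as documented here and returns one message per violation. Whether SynapseML or the service enforces these limits is not stated on this page.

```csharp
using System.Collections.Generic;

// Hypothetical pre-flight check using the limits documented on this page.
public static class RangeSketch
{
    public static List<string> Validate(
        int n, int bestOf, int logProbs, int maxTokens,
        double temperature, double topP, double presencePenalty)
    {
        var errors = new List<string>();
        if (n < 1 || n > 128) errors.Add("n must be in [1, 128]");
        if (bestOf > 128) errors.Add("bestOf must be at most 128");
        if (logProbs < 0 || logProbs > 100) errors.Add("logProbs must be in [0, 100]");
        if (maxTokens < 0) errors.Add("maxTokens must be >= 0");
        if (temperature < 0 || temperature > 2) errors.Add("temperature must be in [0, 2]");
        if (topP < 0 || topP > 1) errors.Add("topP must be in [0, 1]");
        if (presencePenalty < -2 || presencePenalty > 2) errors.Add("presencePenalty must be in [-2, 2]");
        return errors;
    }
}
```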

◆ SetOutputCol()

| OpenAIChatCompletion Synapse.ML.Services.Openai.OpenAIChatCompletion.SetOutputCol | ( | string | value | ) |

Sets value for outputCol

- Parameters

- value: The name of the output column

- Returns

- New OpenAIChatCompletion object

◆ SetPresencePenalty()

| OpenAIChatCompletion Synapse.ML.Services.Openai.OpenAIChatCompletion.SetPresencePenalty | ( | double | value | ) |

Sets value for presencePenalty

- Parameters

- value: How much to penalize new tokens based on whether they appear in the text so far. Increases the model's likelihood of talking about new topics. Has minimum of -2 and maximum of 2.

- Returns

- New OpenAIChatCompletion object

◆ SetPresencePenaltyCol()

| OpenAIChatCompletion Synapse.ML.Services.Openai.OpenAIChatCompletion.SetPresencePenaltyCol | ( | string | value | ) |

Sets value for presencePenalty column

- Parameters

- value: Name of the column that holds presencePenalty: how much to penalize new tokens based on whether they appear in the text so far. Increases the model's likelihood of talking about new topics. Has minimum of -2 and maximum of 2.

- Returns

- New OpenAIChatCompletion object
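A sketch of how the two penalties act on one token's logit, following the adjustment OpenAI has published for frequency_penalty and presence_penalty: logit - count * frequencyPenalty - (count > 0 ? 1 : 0) * presencePenalty, where count is how many times the token has already been generated. The frequency term grows with every repetition, while the presence term is a flat, one-time penalty once the token has appeared. `PenaltySketch` is hypothetical; the service performs any such adjustment itself.

```csharp
using System.Collections.Generic;

// Hypothetical illustration of the published penalty formula; not service code.
public static class PenaltySketch
{
    public static Dictionary<string, double> Apply(
        IReadOnlyDictionary<string, double> logits,
        IReadOnlyDictionary<string, int> countsSoFar,
        double frequencyPenalty,
        double presencePenalty)
    {
        var adjusted = new Dictionary<string, double>();
        foreach (var kv in logits)
        {
            int count = countsSoFar.TryGetValue(kv.Key, out var c) ? c : 0;
            double appeared = count > 0 ? 1.0 : 0.0; // presence: applied once
            adjusted[kv.Key] = kv.Value - count * frequencyPenalty - appeared * presencePenalty;
        }
        return adjusted;
    }
}
```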

◆ SetStop()

| OpenAIChatCompletion Synapse.ML.Services.Openai.OpenAIChatCompletion.SetStop | ( | string | value | ) |

Sets value for stop

- Parameters

- value: A sequence which indicates the end of the current document.

- Returns

- New OpenAIChatCompletion object

◆ SetStopCol()

| OpenAIChatCompletion Synapse.ML.Services.Openai.OpenAIChatCompletion.SetStopCol | ( | string | value | ) |

Sets value for stop column

- Parameters

- value: Name of the column that holds the stop sequence, which indicates the end of the current document.

- Returns

- New OpenAIChatCompletion object
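Generation ends where the stop sequence first occurs, and OpenAI's API documentation states that the returned text does not contain the stop sequence. A minimal sketch of that truncation rule applied to a finished string; `StopSketch` is hypothetical and only illustrates the semantics.

```csharp
using System;

// Hypothetical illustration of stop-sequence truncation.
public static class StopSketch
{
    public static string TruncateAtStop(string generated, string stop)
    {
        if (string.IsNullOrEmpty(stop))
        {
            return generated; // no stop sequence configured
        }
        int index = generated.IndexOf(stop, StringComparison.Ordinal);
        // Keep everything before the first occurrence; the stop sequence itself is dropped.
        return index < 0 ? generated : generated.Substring(0, index);
    }
}
```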

◆ SetSubscriptionKey()

| OpenAIChatCompletion Synapse.ML.Services.Openai.OpenAIChatCompletion.SetSubscriptionKey | ( | string | value | ) |

Sets value for subscriptionKey

- Parameters

- value: the API key to use

- Returns

- New OpenAIChatCompletion object

◆ SetSubscriptionKeyCol()

| OpenAIChatCompletion Synapse.ML.Services.Openai.OpenAIChatCompletion.SetSubscriptionKeyCol | ( | string | value | ) |

Sets value for subscriptionKey column

- Parameters

- value: Name of the column that holds the API key to use

- Returns

- New OpenAIChatCompletion object

◆ SetTemperature()

| OpenAIChatCompletion Synapse.ML.Services.Openai.OpenAIChatCompletion.SetTemperature | ( | double | value | ) |

Sets value for temperature

- Parameters

- value: What sampling temperature to use. Higher values mean the model will take more risks. Try 0.9 for more creative applications, and 0 (argmax sampling) for ones with a well-defined answer. We generally recommend using this or `top_p` but not both. Minimum of 0 and maximum of 2 allowed.

- Returns

- New OpenAIChatCompletion object

◆ SetTemperatureCol()

| OpenAIChatCompletion Synapse.ML.Services.Openai.OpenAIChatCompletion.SetTemperatureCol | ( | string | value | ) |

Sets value for temperature column

- Parameters

- value: Name of the column that holds the sampling temperature. Higher values mean the model will take more risks. Try 0.9 for more creative applications, and 0 (argmax sampling) for ones with a well-defined answer. We generally recommend using this or `top_p` but not both. Minimum of 0 and maximum of 2 allowed.

- Returns

- New OpenAIChatCompletion object
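Temperature rescales the model's scores before sampling: probabilities are softmax(logits / temperature), and temperature 0 degenerates to argmax, as the "0 (argmax sampling)" note says. A minimal sketch over made-up logits; `TemperatureSketch` is hypothetical and is not the service's implementation.

```csharp
using System;
using System.Linq;

// Hypothetical illustration of temperature scaling.
public static class TemperatureSketch
{
    // Returns softmax(logits / temperature); temperature 0 puts all mass on the top logit.
    public static double[] Probabilities(double[] logits, double temperature)
    {
        if (temperature < 0 || temperature > 2)
        {
            throw new ArgumentOutOfRangeException(nameof(temperature), "documented range is 0..2");
        }

        if (temperature == 0)
        {
            int best = Array.IndexOf(logits, logits.Max());
            return logits.Select((_, i) => i == best ? 1.0 : 0.0).ToArray();
        }

        // Subtracting the max logit avoids overflow in Math.Exp and does not change
        // the softmax, because every scaled logit shifts by the same amount.
        double max = logits.Max();
        double[] weights = logits.Select(l => Math.Exp((l - max) / temperature)).ToArray();
        double total = weights.Sum();
        return weights.Select(w => w / total).ToArray();
    }
}
```

Lower temperatures concentrate probability on the highest-scoring token, which is why values near 0 suit tasks with a well-defined answer.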

◆ SetTimeout()

| OpenAIChatCompletion Synapse.ML.Services.Openai.OpenAIChatCompletion.SetTimeout | ( | double | value | ) |

Sets value for timeout

- Parameters

- value: number of seconds to wait before closing the connection

- Returns

- New OpenAIChatCompletion object

◆ SetTopP()

| OpenAIChatCompletion Synapse.ML.Services.Openai.OpenAIChatCompletion.SetTopP | ( | double | value | ) |

Sets value for topP

- Parameters

- value: An alternative to sampling with temperature, called nucleus sampling, where the model considers the results of the tokens with `top_p` probability mass. For example, 0.1 means only the tokens comprising the top 10 percent probability mass are considered. We generally recommend using this or `temperature` but not both. Minimum of 0 and maximum of 1 allowed.

- Returns

- New OpenAIChatCompletion object

◆ SetTopPCol()

| OpenAIChatCompletion Synapse.ML.Services.Openai.OpenAIChatCompletion.SetTopPCol | ( | string | value | ) |

Sets value for topP column

- Parameters

- value: Name of the column that holds topP, for nucleus sampling, an alternative to sampling with temperature where the model considers the results of the tokens with `top_p` probability mass. For example, 0.1 means only the tokens comprising the top 10 percent probability mass are considered. We generally recommend using this or `temperature` but not both. Minimum of 0 and maximum of 1 allowed.

- Returns

- New OpenAIChatCompletion object
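Nucleus sampling keeps the smallest set of most-likely tokens whose cumulative probability reaches topP, then renormalizes over that set before sampling. A minimal sketch of the filtering step over a made-up distribution; `TopPSketch` is hypothetical and is not the service's implementation.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical illustration of nucleus (top_p) filtering.
public static class TopPSketch
{
    public static Dictionary<string, double> Nucleus(
        IReadOnlyDictionary<string, double> probabilities, double topP)
    {
        if (topP < 0 || topP > 1)
        {
            throw new ArgumentOutOfRangeException(nameof(topP), "documented range is 0..1");
        }

        var kept = new List<KeyValuePair<string, double>>();
        double cumulative = 0;
        foreach (var entry in probabilities.OrderByDescending(p => p.Value))
        {
            kept.Add(entry);
            cumulative += entry.Value;
            if (cumulative >= topP)
            {
                break; // smallest prefix reaching topP; always keeps at least one token
            }
        }

        // Renormalize so the surviving tokens' probabilities sum to 1.
        double mass = kept.Sum(p => p.Value);
        return kept.ToDictionary(p => p.Key, p => p.Value / mass);
    }
}
```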

◆ SetUrl()

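| OpenAIChatCompletion Synapse.ML.Services.Openai.OpenAIChatCompletion.SetUrl | ( | string | value | ) |

Sets value for url

- Parameters

- value: Url of the service

- Returns

- New OpenAIChatCompletion object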

◆ SetUser()

| OpenAIChatCompletion Synapse.ML.Services.Openai.OpenAIChatCompletion.SetUser | ( | string | value | ) |

Sets value for user

- Parameters

- value: The ID of the end-user, for use in tracking and rate-limiting.

- Returns

- New OpenAIChatCompletion object

◆ SetUserCol()

| OpenAIChatCompletion Synapse.ML.Services.Openai.OpenAIChatCompletion.SetUserCol | ( | string | value | ) |

Sets value for user column

- Parameters

- value: Name of the column that holds the ID of the end-user, for use in tracking and rate-limiting.

- Returns

- New OpenAIChatCompletion object

The documentation for this class was generated from the following file:

- synapse/ml/services/openai/OpenAIChatCompletion.cs

1.8.17